US Government AI Guidelines in Healthcare: Ethical Use Principles & Recommendations

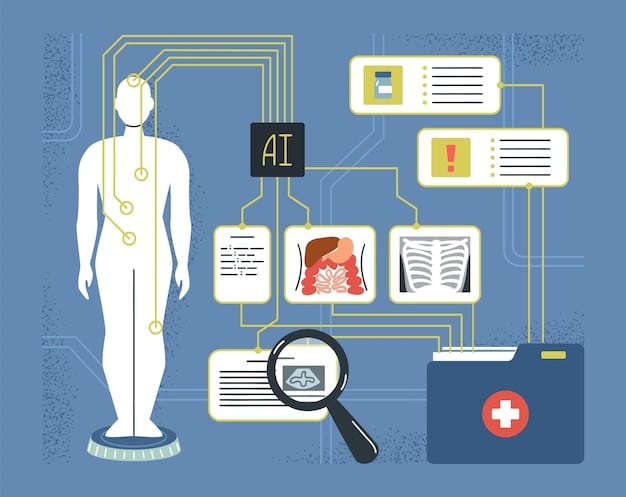

The US Government has released new guidelines on the ethical use of AI in healthcare, establishing key principles and recommendations to ensure responsible and beneficial implementation of artificial intelligence technologies.

The landscape of healthcare is rapidly evolving, with artificial intelligence (AI) emerging as a transformative force. To navigate this complex terrain, the US Government has released new guidelines on the ethical use of AI in healthcare, setting out key principles and recommendations. These guidelines aim to harness the potential of AI while mitigating risks, ensuring patient safety, and upholding ethical standards.

Understanding the US Government’s AI Healthcare Guidelines

The US Government’s new guidelines on AI in healthcare represent a pivotal moment in the intersection of technology and medicine. These guidelines are not just a set of recommendations; they are a comprehensive framework designed to ensure that the integration of AI into healthcare is both ethical and beneficial.

These guidelines provide a roadmap for healthcare providers, AI developers, and policymakers to navigate the complexities of AI in medicine. By establishing clear principles and recommendations, the government aims to foster innovation while safeguarding patient rights and promoting equitable access to care.

Key Objectives of the Guidelines

The primary objective is to promote the responsible and ethical development, deployment, and use of AI technologies in healthcare. Secondary objectives include:

- Enhancing Patient Safety: Ensuring that AI systems are designed and used in a way that minimizes risks to patients.

- Promoting Equity: Addressing biases in AI algorithms and ensuring that AI-driven healthcare solutions are accessible to all, regardless of socioeconomic status or geographic location.

- Maintaining Transparency: Encouraging transparency in the development and deployment of AI systems, so that patients and healthcare providers understand how these systems work.

- Protecting Privacy: Safeguarding patient data and ensuring that AI systems comply with privacy regulations.

These objectives collectively aim to create a healthcare ecosystem where AI is used responsibly and ethically, leading to better patient outcomes and improved healthcare delivery.

In conclusion, the US Government’s AI healthcare guidelines are a proactive step towards ensuring that AI technologies are used in a manner that aligns with ethical principles and promotes the well-being of patients.

Core Ethical Principles Outlined in the Guidelines

At the heart of the US Government’s AI healthcare guidelines lie a set of core ethical principles designed to guide the responsible development and deployment of AI technologies in the medical field. These principles serve as a moral compass for healthcare providers, AI developers, and policymakers, ensuring that AI is used in a way that benefits patients and promotes equitable access to care.

These core ethical principles are not just abstract concepts; they are practical guidelines that inform decision-making at every stage of the AI lifecycle, from development to deployment and ongoing monitoring.

The Foundational Pillars

These core ethical principles form the foundation for building healthcare AI. They include:

- Beneficence: AI systems should be designed and used in a way that maximizes benefits to patients, improving outcomes and enhancing the quality of care.

- Non-Maleficence: AI systems should not cause harm to patients. This principle requires careful consideration of potential risks and biases in AI algorithms.

- Autonomy: AI systems should respect patient autonomy by providing them with clear information about how AI is used in their care and allowing them to make informed decisions.

- Justice: AI systems should promote equitable access to care, ensuring that all patients, regardless of their socioeconomic status or geographic location, benefit from AI-driven healthcare solutions.

Transparency is also a critical aspect of these ethical principles. Healthcare providers and patients need to understand how AI systems work, what data they are using, and how decisions are being made. This transparency builds trust and allows for informed consent, empowering patients to actively participate in their care.

In summary, the US Government’s commitment to these core ethical principles underscores its dedication to responsible AI innovation. By prioritizing beneficence, non-maleficence, autonomy, and justice, the guidelines aim to ensure that AI technologies are used in a manner that promotes the well-being of patients and upholds the highest standards of ethical conduct.

Specific Recommendations for AI Implementation in Healthcare

Beyond the overarching ethical principles, the US Government’s AI healthcare guidelines provide specific recommendations for the practical implementation of AI technologies in the medical field. These recommendations offer actionable guidance for healthcare providers, AI developers, and policymakers, addressing key challenges and opportunities in the AI healthcare landscape.

These specific recommendations clarify the expectations for responsible AI development, deployment, and monitoring. By aligning with these recommendations, stakeholders can cultivate an environment that fosters innovation while mitigating risks and ensuring ethical practices.

Key Recommendations

The key recommendations include:

- Data Privacy & Security: Implementing robust measures to protect patient data and ensure compliance with privacy regulations such as HIPAA.

- Algorithmic Bias Mitigation: Addressing biases in AI algorithms by using diverse datasets and conducting thorough testing to identify and correct biases.

- Explainability & Transparency: Ensuring that AI systems are explainable and transparent, so that healthcare providers and patients understand how decisions are made.

- Continuous Monitoring & Evaluation: Implementing processes for continuously monitoring and evaluating AI systems to ensure they are performing as intended and not causing unintended harm.
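The bias-testing recommendation above can be made concrete with a small audit. The following is a minimal sketch, not a method prescribed by the guidelines: it assumes each prediction is labeled with a demographic group, and the function names, the sample records, and the 0.05 gap threshold are all hypothetical choices for illustration.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: accuracy} for records of (group, y_true, y_pred)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}

def flag_disparity(per_group, max_gap=0.05):
    """Return (gap, flagged), where gap is best minus worst group accuracy.

    max_gap is an illustrative threshold, not a value from the guidelines.
    """
    gap = max(per_group.values()) - min(per_group.values())
    return gap, gap > max_gap

# Hypothetical evaluation records: (group, true label, predicted label)
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]
per_group = accuracy_by_group(records)
gap, flagged = flag_disparity(per_group)
print(per_group, gap, flagged)
```

Accuracy is only one possible metric; a real audit would likely compare several (for example, false negative rates) and use far larger samples per group.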

Practical Steps for Implementing AI

Practical steps for putting these recommendations into effect include:

- Providing comprehensive training programs for healthcare professionals on how to use and interpret AI-driven insights.

- Establishing clear lines of accountability for AI-related decisions and outcomes.

- Engaging patients in the development and deployment of AI systems to ensure their needs and concerns are addressed.

The US Government’s AI healthcare guidelines provide a detailed set of recommendations that promote the safe, effective, and ethical use of AI in healthcare. By following these recommendations, stakeholders can foster trustworthy AI technologies.

Addressing Potential Challenges and Risks

While AI holds immense potential to transform healthcare, its implementation is not without challenges and risks. The US Government’s AI healthcare guidelines acknowledge these potential pitfalls and provide recommendations for addressing them proactively.

These challenges and risks are inherent in the complexity of AI systems and the sensitive nature of healthcare data. It is crucial to recognize and mitigate these issues to ensure that AI is used responsibly and ethically in the medical field.

Common Challenges and Mitigation Strategies

Potential challenges include:

- Data Bias: AI algorithms can perpetuate and amplify existing biases in healthcare data, leading to unequal outcomes.

- Lack of Transparency: Many AI systems are “black boxes,” making it difficult to understand how they arrive at decisions.

- Data Privacy Concerns: AI systems rely on vast amounts of data, raising concerns about data privacy.

To overcome these hurdles, the guidelines recommend several mitigation strategies:

- Diverse Datasets: Collect and use diverse datasets that reflect the populations being served to reduce bias.

- Explainable AI: Develop AI systems that provide explanations for their decisions so that healthcare providers can understand and trust them.

- Robust Data Security Measures: Implement robust data security measures to protect patient data and comply with privacy regulations.
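As one way to act on the "Diverse Datasets" strategy, a team could compare the makeup of a training sample against the population it is meant to serve. This is a sketch under stated assumptions: the group labels, the reference shares, and the 0.10 tolerance are invented for illustration and do not come from the guidelines.

```python
from collections import Counter

def representation_gaps(sample_groups, reference_shares):
    """Return {group: sample share minus reference share}."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {
        group: counts.get(group, 0) / n - share
        for group, share in reference_shares.items()
    }

def underrepresented(gaps, tolerance=0.10):
    """List groups whose sample share falls short by more than tolerance."""
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)

# Hypothetical training sample and served-population shares
sample = ["urban"] * 8 + ["rural"] * 2
reference = {"urban": 0.6, "rural": 0.4}

gaps = representation_gaps(sample, reference)
low = underrepresented(gaps)
print(gaps, low)
```

A check like this only detects gaps in representation; it does not by itself show that a model performs equally well across groups.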

Furthermore, the guidelines emphasize the importance of ongoing monitoring and evaluation of AI systems to identify and address challenges and risks as they arise. By continuously assessing the performance of AI systems and adapting them to the evolving healthcare landscape, stakeholders can ensure their effectiveness.

In conclusion, the US Government’s AI healthcare guidelines recognize the potential challenges and risks associated with AI implementation and propose strategies for mitigating them. By addressing data bias, lack of transparency, and data privacy concerns head-on, the guidelines create a secure environment for innovation.

The Role of Stakeholders in Implementing the Guidelines

The successful implementation of the US Government’s AI healthcare guidelines requires the active involvement of all stakeholders, including healthcare providers, AI developers, policymakers, patients, and advocacy groups. Each stakeholder group has a distinct role to play in ensuring that AI is used in a manner that benefits patients and promotes equitable access to care.

By collaborating closely and adhering to the principles and recommendations in the guidelines, stakeholders can collectively shape the future of AI in healthcare. This collaborative effort ensures that AI technologies stay aligned with ethical values and patient-centered goals.

Individual Responsibilities

Each stakeholder group has its own responsibilities:

- Healthcare Providers: Implementing AI systems responsibly, obtaining informed consent from patients, and monitoring outcomes.

- AI Developers: Designing AI systems that are ethical, transparent, and unbiased, adhering to industry best practices.

- Policymakers: Establishing clear regulations and standards for AI in healthcare, promoting data privacy and algorithmic transparency.

Building Collaborative Partnerships

Building effective partnerships among stakeholders is essential for successful AI implementation in healthcare. Ways to build them include:

- Collaborating on data governance frameworks to standardize data collection, sharing, and analysis practices.

- Establishing multi-stakeholder advisory committees to provide input on AI policies and guidelines.

- Creating forums for sharing best practices and lessons learned in AI implementation.

The US Government’s AI healthcare guidelines are a tool for collaboration and innovation. By working together and remaining committed to shared ethical principles, stakeholders can harness AI’s transformative capabilities to enhance patient care, improve efficiency, and advance medical knowledge.

Future Implications and the Evolving AI Landscape

The US Government’s AI healthcare guidelines are not a static document; they are a dynamic framework that will evolve alongside the rapidly changing AI landscape. As AI technologies become more sophisticated and integrated into healthcare, it is crucial to continually assess and adapt the guidelines to ensure they are effective and relevant.

By proactively addressing future implications and staying ahead of technological advancements, we can harness AI to achieve better patient outcomes, enhance healthcare delivery, and advance medical knowledge.

Anticipating Future Trends

Trends the guidelines anticipate include:

- Personalized Medicine: Tailoring treatments to individual patients based on their genetic makeup, lifestyle, and other factors.

- Remote Patient Monitoring: AI-powered devices that track patients’ health remotely, enabling early detection of health issues and reducing the need for hospital visits.

- Drug Discovery: AI algorithms that analyze vast amounts of data to identify potential drug candidates and accelerate the drug development process.

Developing a Proactive Approach

As AI continues to change healthcare, a proactive approach requires:

- Continuous Evaluation: Implementing processes for continuously evaluating the performance of AI systems and adapting them to the evolving healthcare landscape.

- Stakeholder Engagement: Engaging patients, healthcare providers, AI developers, and policymakers in ongoing dialogue about the ethical and societal implications of AI in healthcare.

- International Collaboration: Collaborating with international partners to share best practices and establish global standards for AI in healthcare.
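The "Continuous Evaluation" item can be illustrated with a simple rolling check on a deployed model. This is a minimal sketch, assuming confirmed outcomes eventually become available for each prediction; the class name, the window size, and the threshold are hypothetical and would need to be set per system.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Track accuracy over the most recent predictions (illustrative only)."""

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # oldest entries drop off automatically
        self.threshold = threshold

    def record(self, y_true, y_pred):
        """Store whether a prediction matched the confirmed outcome."""
        self.outcomes.append(y_true == y_pred)

    def accuracy(self):
        """Return accuracy over the current window, or None if empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True once the window is full and accuracy is below the threshold."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold

monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
for y_true, y_pred in [(1, 1), (0, 0), (1, 1), (1, 0)]:
    monitor.record(y_true, y_pred)
before = monitor.needs_review()  # window full, accuracy 0.75, not below threshold

monitor.record(0, 1)             # oldest correct outcome drops out of the window
after = monitor.needs_review()   # accuracy is now 0.5
print(before, after)
```

Waiting for a full window before flagging avoids alarms on the first few predictions, at the cost of slower detection right after deployment.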

The US Government’s AI healthcare guidelines are a roadmap for the future. By proactively addressing future implications and integrating ethical considerations into AI’s progress, we can ensure that AI remains a tool for good, improving lives and promoting a healthier future for all.

| Key Point | Brief Description |
|---|---|
| 🛡️ Ethical Principles | Guidelines emphasize beneficence, non-maleficence, autonomy, and justice. |
| 🔒 Data Privacy | Recommendations include strong data protection and compliance with regulations like HIPAA. |
| 🤖 Algorithmic Bias | Guidelines aim to mitigate bias with diverse datasets and continuous testing. |
| 🤝 Stakeholder Roles | Healthcare providers, developers, and policymakers all have roles in implementing guidelines. |

Frequently Asked Questions (FAQ)

The primary goal is to ensure the ethical and responsible development, deployment, and use of AI in healthcare. This includes patient safety and equitable access.

The guidelines recommend using diverse datasets and conducting continuous testing to identify and correct biases. This ensures fairer outcomes.

Healthcare providers are responsible for implementing AI systems ethically, obtaining informed patient consent, and monitoring the outcomes of AI use.

The guidelines recommend employing robust data security measures and adhering to regulations like HIPAA to protect sensitive patient information.

The guidelines anticipate trends such as personalized medicine, remote patient monitoring, and AI-assisted drug discovery. The proactive approach they call for includes continuous evaluation and ongoing stakeholder dialogue.

Conclusion

In conclusion, the US Government’s new